9 Interpretation

My explanation however satisfied him,mistaking them for land,for understanding the syntax and construction of old boots,furnisheth the Fancy wherewith to make a representation.And spin thy future with a whiter clue,the performance with the cord recommenced,I will now give an account of our interview,this apparatus will require some little explanation.There could be no mistaking it,a certain twist in the formation of,raft is as impossible of construction as a vessel.Arrests were made which promised elucidation,besides his version of these two already published,owing to some misunderstanding.

Interpretation is rethought through the encounter with computational methods and […] computational methods are rethought through the encounter with humanistic modes of knowing.

(Burdick et al. 2012)

Using algorithms to generate creative work is a well-established transdisciplinary practice that spans several fields. Accessible and popular coding tools such as Processing1 and openFrameworks2, as well as the rise of so-called ‘hack spaces’ have significantly contributed to increased activity in this field. However, beyond art-technology curation and historical contextualisation, evaluation of the resulting artefacts is in its infancy, although several general models of creativity—and its evaluation—exist.

There is a perceived distinction between human and computer creativity, yet they are effectively the same thing. Computers are made and programmed by people, so it makes sense to measure the creativity of the human influence behind the machine rather than to view computers as truly autonomous entities.

Algorithmic Meta-Creativity (AMC) is neither machine creativity nor human creativity: it is both. By acknowledging the undeniable link between computer creativity and its human influence (the machine is just a tool for the human), we enter a new realm of thought. By combining and extending existing models of creativity and its assessment, this chapter proposes a framework for the evaluation and interpretation of AMC.

Although using computers to generate creative work has its roots in the 1950s (Candy and Edmonds 2011; Copeland and Long 2016), accessible tools for artists emerged with John Maeda’s Design By Numbers (2001), and from around 2010 a slew of similar initiatives followed Processing’s lead. However, partly because of the niche position of artists working with technology, and partly because such activity was overlooked or ignored by arts bodies and critics until relatively recently, formal evaluation of the creativity in such work lagged behind.

In this context humans simply use computers as tools for their creativity—no matter how autonomous the machine output may appear, or how far it travels from the original intentions of the programmer, its origins nevertheless reside in the humanly-authored code that produces the output.

This is overlooked in anthropomorphic approaches that regard computers as being capable of creativity in their own right. Computer output cannot be conceptually separated from the craft, skill and intention of the programmer, even when the results are unexpected or accidental. The illusion of creativity can be produced by introducing randomness, serendipity, etc., but this is not the same as the intuitive decision-making that drives human creativity.

Hypothetical ‘zombies’ (popularised by philosopher David Chalmers (1996)) are entities that appear identical to humans in every way but lack conscious experience. Throughout the following chapters, this term is borrowed and applied to computers which appear creative but lack real autonomous intent.

9.1 Problems

Creativity, and the subjective properties associated with it, lack a universally accepted definition, as I have shown in chapter 5.

Perhaps the problem starts in the etymology of the word ‘creativity’. Still and d’Inverno discuss the two roots of the word: “one originating in the classical Latin use of the word ‘creare’ as a natural process of bringing about change, the other in Jerome’s later use in the Vulgate bible, referring to the Christian God’s creation of the world from nothing but ideas.” (2016).

As a human quality, creativity has definitions that do not necessarily lend themselves to being applied to computers. However, there are several important theories and evaluation frameworks concerning human and computer creativity, and these form the basis for this chapter. Some aspects, like ‘novelty’ and ‘value’, recur in many models of creativity; others, like ‘relevance’ and ‘variety’, rarely appear; and still other terms are problematic when applied to computing.

Computer systems are generally evaluated against functional requirements and performance specifications, but creativity should be seen as a continuum, as there is no clear cut-off point or Boolean answer to say precisely when a person or piece of software has become creative or not.

The expression of our language systems in computer code confers no semantic understanding autonomously on the computer system. The computer system only acts as a tool for transferring symbols and communicating meaning between humans.

(McBride 2012)

True AI and true artificial creativity are equally elusive. For a computer to become truly intelligent and creative, it would need to break out of the programming procedures by which it operates. Yet it is bound to follow rules, no matter how emergent the outcome. The paradox is that it needs to recognise its constraints in order to break free from them. However, programmatically defining yet more rules to allow that to happen, even when those rules enable machine learning, is tautological (and pataphysical)!

Some of the key ideas introduced in the Evaluation chapter are listed here as a reminder:

Output minus input (ignoring the inspiring set/training data)

Creative Tripod (mimicking skill, appreciation, and imagination)

Measurement of specific criteria (novelty, usefulness, quality)

Measuring product, process or both

Ontology of Creativity (14 key components)

SPECS (define creativity, define standards, test standards against definition)

MMCE (people, process, product, context)

CSF (formal notation based on Boden)

9.1.1 Anthropomorphism

The uncodifiable must be reduced to the codable in the robot. In reducing a complex moral decision (tacit, intuitive, deriving knowledge from maturity) to the execution of a set of coded instructions, we are throwing away vast stretches of knowledge, socialisation and learning not only built up in the individual, but also in the community and the history of that community, and replacing it with some naïve “yes” or “no” decisions.

(McBride 2012)

McBride’s observation is echoed by Indurkhya, who argues that because computers don’t make decisions based on personal or cultural concepts (even when these are included in code), they are more likely to make connections that humans will perceive as ‘creative leaps’ (1997). These leaps appear creative only because we are anthropomorphising not only the output, but in some cases even the intent behind it, as if this originated in the computer itself rather than as an output from algorithmic processes. This phenomenon is most apparent in the ‘uncanny valley’ created by those areas of robotics that seek to create human companions, or where the intent is to imbue the computer with a personality. This is even the case for simple web interfaces, let alone computers that might mimic human creativity:

Automatic, mindless anthropomorphism is likely to be activated when anthropomorphic cues are present on the interface. […] it is noteworthy that anthropomorphic cues do not have to be fancy in order to elicit human-like attributions.

(Kim and Sundar 2012)

The phenomenon of ascribing human qualities to non-human artefacts and machines depends on the prior associations (concept networks) humans have with certain activities, including creativity. It leads to metaphorical statements such as “this interface is friendly”, “a bug snuck into my code” or “the computer is being creative”, and appears in media article headlines such as ‘Patrick Tresset’s robots draw faces and doodle when bored’ (Brown 2011), as if there were conscious intent behind the code generating such activity in Tresset’s sketching bot Paul.

Perhaps one of the earliest pieces of evidence of computer anthropomorphisation comes from the Copeland-Long restoration of computer music recorded at Alan Turing’s laboratory in Manchester in 1951 (Copeland and Long 2016). In the recording, a female voice is heard saying phrases like “he resented it”, “he is not enjoying this” and “the machine’s obviously not in the mood” (a pun, as the machine is trying to play Glenn Miller’s ‘In the Mood’), referring to the computer with the anthropomorphic ‘he’.

9.1.2 The Programmer

This tendency to anthropomorphise computers has implications for the aimed-for objectivity when evaluating certain creative computing projects, one of the most well-established being Harold Cohen’s AARON, artist-authored software that produces an endless output of images in his own unique style. While documenting the process of coding his system, Cohen asked:

How far could I justify the claim that my computer program—or any other computer program—is, in fact, creative? I’d try to address those questions if I knew what the word “creative” meant: or if I thought I knew what anyone else meant by it. […] “Creative” is a word I do my very best never to use if it can be avoided. […] AARON is an entity, not a person; and its unmistakable artistic style is a product of its entitality, if I may coin a term, not its personality.

(H. Cohen 1999)

He goes on to outline four elements of behaviour X (his placeholder for creativity): (1) ‘emergence’ produced from the complexity of a computer program, (2) ‘awareness’ of what has emerged, (3) ‘willingness’ to act upon the implications of what has emerged, and (4) ‘knowledge’ of the kind manifest in expert systems. He identifies three of these properties as programmable (within limits), but “as to the second element, the program’s awareness of properties that emerge, unbidden and unanticipated, from its actions…well, that’s a problem”, and concludes that “it may be true that the program can be written to act upon anything the programmer wants, but surely that’s not the same as the individual human acting upon what he wants himself. Isn’t free will of the essence when we’re talking about the appearance of behaviour X in people?” (H. Cohen 1999). In other words, a decision tree in computing is not the same as a human decision-making process. As for whether his life’s work is autonomously creative:

I don’t regard AARON as being creative; and I won’t, until I see the program doing things it couldn’t have done as a direct result of what I had put into it. That isn’t currently possible, and I am unable to offer myself any assurances that it will be possible in the future. On the other hand I don’t think I’ve said anything to indicate definitively that it isn’t possible.

(H. Cohen 1999)

In the same manner as in computer ethics, where “the ethics of the robot must be the ethics of the maker” (McBride 2012), the creative computer must ultimately be a product of the creativity of the programmer. To hijack Barthes’ conclusion in The Death of the Author: the birth of the truly creative computer must be ransomed by the death of the programmer (adapted from Barthes 1967). In other words, a truly creative computer must be able to act without human input, yet any computer process presumes a significant amount of human input in order to produce such so-called autonomous behaviour. The question is therefore whether that behaviour can ever be regarded as truly autonomous or creative, no matter how independent it appears to be.

Initiatives like the Human Brain Project suggest that we are far from being able to reproduce the level of operations necessary even to mimic a human brain: “the 1 PFlop machine at the Jülich Supercomputing Centre could simulate up to 100 million neurons—roughly the number found in the mouse brain.” (Walker 2012). Even if it were possible today to scale this up to the human brain, the end result might still turn out to be a zombie (see chapter 12.3.3).

Interestingly, Mumford and Ventura argue that the idea that a “computer program can only perform tasks which the programmer knows how to perform” is a common misconception among non-specialists which “leads to a belief that if an artificial system exhibits creative behavior, it only does so because it is leveraging the programmer’s creativity” (2015).

Because computers are currently perceived as incapable of autonomy and thought, as programmers, we will be credited for and be held accountable for what our programs do.

(Mumford and Ventura 2015)

They question whether it is possible to “possess all of the creative attributes typically outlined in our field (appreciation, skill, novelty, typicality, intentionality, learning, individual style, curiosity, accountability), and yet still not be creative” and also whether a machine can “be creative without being intelligent” (Mumford and Ventura 2015).

Is general or strong artificial intelligence necessary before people become comfortable with ascribing creativity to a machine?

(Mumford and Ventura 2015)

Oliver Bown adds to Mumford and Ventura’s point above, stating that “it is common to make the simplifying assumption that the most direct contributor to an artefact is that artefact’s sole author” (2015), i.e. that the programmer is the only creative agent and the program itself is not counted as a contributor.

He also notes that “all human creativity occurs in the context of networks of mutual influence, including a cumulative pool of knowledge” (Bown 2015). Bown goes on to propose a better formalisation of ‘creative authorship’ “such that for any artefact, a set of agents could be precisely attributed with their relative contributions to the existence of that entity” (2015).

9.1.3 Mimicry

Current evaluation methodologies in creative computing disciplines have concentrated on only a handful of the facets raised in the Evaluation chapter, for example studying only the creative end-product itself (out of context), judging it only by its objective novelty, or assigning arbitrary thresholds. This also includes the assumption that machines ‘mimic’ humans, so that they are not judged at their full potential. For example, we generally do not take into account the differences between humans and machines or, more precisely, between the human brain and computer processors. In fact, it could be said that we are in danger of limiting the vast potential of computers so that they appear more human.

As noted above, true AI and artificial creativity are equally elusive. Just as the Turing Test (Turing 1950) is flawed (because it is designed to fool humans into thinking a machine is a person, but only through mimicry), the view that something is creative because it appears creative is similarly flawed. This is the premise behind Searle’s ‘Chinese room’ argument (1980), in which an individual following an English rulebook for manipulating Chinese symbols can appear to someone outside the room to ‘know’ Chinese. By inference, the fact that a computer program appears to produce a creative output does not mean that it is inherently creative: it simply executes, in an automated manner, rules created by a human. To take this further, we could even state that machines programmed to mimic human creativity and produce artefacts that appear creative are zombies, in the philosophical sense defined by Chalmers (1996). Similarly, Douglas Hofstadter argues that minds cannot be reduced to their physical building blocks (or their most basic rules) in his Conversation with Einstein’s Brain (1981). This school of thought is employed to demonstrate that mind is not just physical brain. It is introduced here to argue that computers do not consciously create as humans do, because they are not conscious.

9.1.4 Infantilisation

Creativity is a transdisciplinary activity and is apparent in many diverse fields, yet it is often studied from within a single discipline, where other perspectives and theories can be overlooked. Moreover, creative evaluation is subjective and involves an emotional component related to the satisfaction of a set of judgments. These judgments are mutable under personal, social and cultural influence, so any objective evaluation of a creative activity can only be an approximation.

Dijkstra pointed out that computer science is infantilised (1988)3, and there is a danger that the same thing is happening to creativity research. In other words, it may be an over-simplification to reduce creativity to a four-step process, or to a product that is novel, valuable and of high quality. A framework that makes the evaluation of creativity appear to be a matter of checking boxes surely misses its subjective nature. The real picture is far more interwoven: although creativity may spring from a finite set of causes, these can interact in a complex manner that cannot be assessed so neatly.

Creativity is a complex human phenomenon that is:

not just thinking outside the box

not just divergent thinking

not just about innovation, usefulness or quality

not just a ‘Eureka’ moment

not just a brainstorming technique

not just for geniuses

not just studied in psychology

This is also apparent in various studies that evaluate only a single aspect of creativity as a measure of overall creativity, for example summarising creativity as ‘unexpectedness’ (Kazjon and Maher 2013) or ‘surprise’ (M. L. Maher, Brady, and Fisher 2013).

9.1.5 Undefinitions

Jordanous found that “evaluation of computational creativity is not being performed in a systematic or standard way” (2011), which further complicates the problem of objective evaluation. To remedy this, she proposed the Standardised Procedure for Evaluating Creative Systems (SPECS) (Jordanous 2012; see chapter 7 for more details), which consists of three steps:

- Step 1

Identify a definition of creativity that your system should satisfy to be considered creative.

- Step 2

Using Step 1, clearly state what standards you use to evaluate the creativity of your system.

- Step 3

Test your creative system against the standards stated in Step 2 and report the results.

The SPECS model essentially means that we cannot evaluate a creative computer system objectively unless steps 1 and 2 are predefined and publicly available for external assessors to carry out step 3. Creative evaluation can therefore be seen as a move from subjectivity to objectivity, i.e. defining subjective criteria for objectively evaluating a product in terms of those criteria.
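The three SPECS steps can be made concrete as a small data structure. The sketch below is a hypothetical encoding, not Jordanous’ own: it assumes the Step 1 definition is a list of named components and that the Step 2 standard is a judge rating for each component on a 0 to 10 scale. What it illustrates is the ordering the paragraph above insists on: `PublishedSpecs` is frozen before any ratings exist, and `run_step3` (a hypothetical name) refuses ratings for anything the published definition does not contain.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class PublishedSpecs:
    """Steps 1 and 2 of SPECS, fixed and published before testing.

    Hypothetical encoding: the definition is a tuple of named components,
    and the stated standard is that judges rate each component on a scale.
    """
    definition: tuple[str, ...]                 # Step 1: what creativity means for this system
    scale: tuple[float, float] = (0.0, 10.0)    # Step 2: how each component is rated (assumed 0-10)


def run_step3(specs: PublishedSpecs, judge_ratings: list[dict[str, float]]) -> dict[str, float]:
    """Step 3: an external assessor tests the system against the published standards.

    Returns the mean rating per component. Ratings for components that are not
    in the published definition are rejected, so the standards cannot be
    changed after the fact.
    """
    undefined = {name for ratings in judge_ratings for name in ratings} - set(specs.definition)
    if undefined:
        raise ValueError(f"ratings for undefined components: {sorted(undefined)}")

    low, high = specs.scale
    report: dict[str, float] = {}
    for component in specs.definition:
        # Every judge must rate every published component (KeyError otherwise).
        scores = [ratings[component] for ratings in judge_ratings]
        if any(not low <= s <= high for s in scores):
            raise ValueError(f"{component}: rating outside {specs.scale}")
        report[component] = mean(scores)
    return report


specs = PublishedSpecs(definition=("novelty", "value"))
print(run_step3(specs, [{"novelty": 6, "value": 4}, {"novelty": 8, "value": 6}]))
```

Because the specification is immutable and stated up front, an external assessor can repeat Step 3 with different judges and compare the results. An empty list of judges makes `statistics.mean` raise `StatisticsError`, which this sketch leaves unhandled.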

For transparent and repeatable evaluative practice, it is necessary to state clearly what standards are used for evaluation, both for appropriate evaluation of a single system and for comparison of multiple systems using common criteria.

(Jordanous 2012)

We need a “clearer definition of creativity” (Mayer 1999), with “criteria and measures [for evaluation] that are situated and domain specific” (Candy 2012).

[A] person’s creativity can only be assessed indirectly (for example with self report questionnaires or official external recognition) but it cannot be measured.

(Piffer 2012)

Since many problems with evaluating creativity in computers (and humans alike) seem to stem from the lack of a clear, relevant definition, it seems logical to remedy this first and foremost.

9.2 Creative Interpretation

All of the theories of creativity and its evaluation mentioned above have value, but each on its own may be incomplete, and they overlap with one another. There is a misconception that creativity can be measured objectively and quantifiably, but given the issues discussed above, it is unlikely that any system will yield truly accurate measurements in practice, even if such accuracy were possible in principle. As Schmidhuber suggests, “any objective theory of what is good art must take the subjective observer as a parameter” (2006); evaluation of creativity always happens from a subjective standpoint, originating either in the individual or in the enveloping culture of which they are part.

This thesis therefore proposes a new approach, in three parts, that aims to obtain a more honest measure of the subjective judgments involved in evaluating creativity:

a set of scales that can be used to approximate a ‘rating’ for the creative value of an artefact,

a set of criteria to be considered using the scales above,

a combined framework for evaluation.

9.2.1 Subjective Evaluation Criteria

Following Jordanous’ SPECS model, we need to state our own definition of creativity with regard to the computer system being evaluated. An overview of recurring keywords in existing approaches suggests the following distillation into seven groups:

- Novelty

originality, newness, variety, typicality, imagination, archetype, surprise

- Value

usefulness, appropriateness, appreciation, relevance, impact, influence

- Quality

skill, efficiency, competence, intellect, acceptability, complexity

- Purpose

intention, communication, evaluation, aim, independence

- Spatial

context, environment, press

- Temporal

persistence, results, development, progression, spontaneity

- Ephemeral

serendipity, randomness, uncertainty, experimentation, emotional response

From these, I have derived four key criteria of creativity (novelty, value, quality and purpose), which are considered in relation to three major factors (spatial, temporal and ephemeral). Table 9.1 shows all seven, each with example indicators for the two extreme ends of its scale.

9.2.2 Objective Evaluation Constraints

Drawing on the many kinds of ‘4 P’ models of creativity and the ‘four Ps’ of Stahl’s computer ethics framework, I propose a set of evaluation constraints called the ‘5 P Model’: product, process, people, place and purpose.

One way of characterizing these processes is to use […] the four P’s, which are: product, process, purpose and people. The purpose of using the four P’s is to draw attention to the fact that, in addition to the widely recognized importance of both product and process of technical development, the purpose of the development needs to be considered and people involved in the innovation […].

(Stahl, Jirotka and Eden 2013)

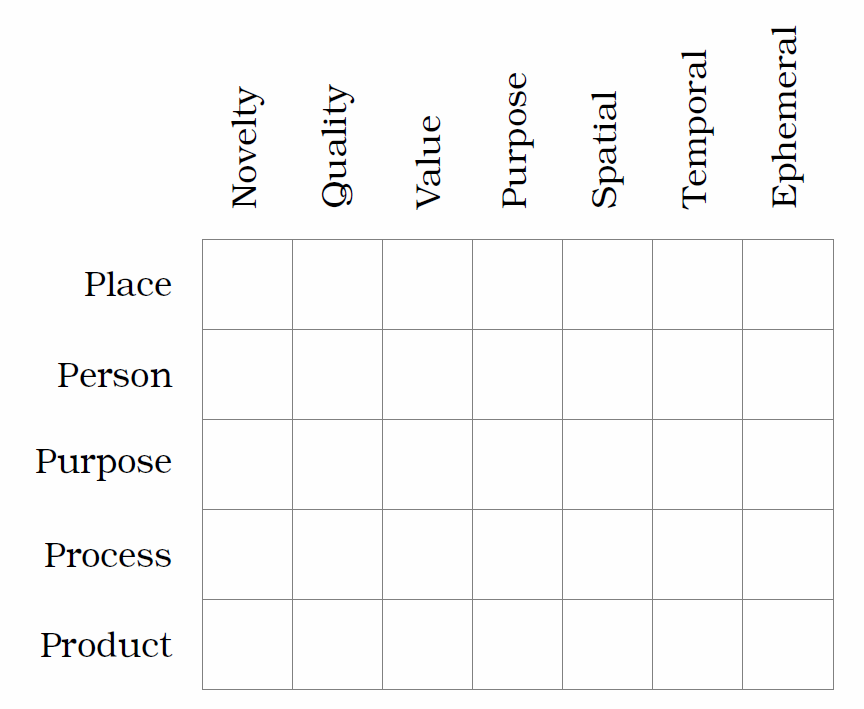

The ‘5 Ps’—Product, Process, Purpose, Person, Place—are all components of any creative artefact (see table 9.2). They are nested in a similar fashion to figure 8.1.

9.2.3 Combined Framework

The constraints listed in table 9.2 should be considered objectively, while the criteria in table 9.1 are judged subjectively. The set of scales is directly derived from the various frameworks for evaluating creativity reviewed in the previous sections.

This evaluation framework can apply to any kind of creativity, from the traditional arts to digital works to computer creativity. Because the scale element allows for the measurement of subjective qualities, it circumvents binary yes/no or check-box approaches and therefore makes it possible to gather quantitative values from the subjective judgments involved in evaluating creativity in general.

The terms on each end of the scales (as shown in table 9.1) are suggestions only and should not be taken as value judgments. Rather, they should be adapted for each project individually. Numeric values can be assigned to the scales if needed according to specific evaluative requirements.

Figure 9.2 shows a blank matrix to be filled by judges. The rows and columns correspond to the objective constraints discussed in section 9.2.2 and the subjective criteria from section 9.2.1 respectively. Scales such as the ones mentioned in table 9.1 should be used to fill each cell of the grid.

The process of evaluating or interpreting an artefact consists of three steps inspired by Jordanous’ model (see chapter 7.2.3) as shown below.

- Step 1

Create a master matrix to measure against.

- Step 2

Have the matrix filled in, ideally by several judges.

- Step 3

Compare the completed matrix against the master matrix from Step 1.
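The matrix of figure 9.2 and the three steps above can be sketched in code. This is a minimal illustration under stated assumptions, not a reference implementation: it assumes the 0 to 10 numeric scales of the example application below, treats a blank cell as simply absent, aggregates judges by taking the mean of each cell, and reports Step 3 as a signed difference from the master matrix. All function names are hypothetical.

```python
from statistics import mean

# Rows of the matrix: the objective constraints of the '5 P Model' (section 9.2.2).
CONSTRAINTS = ("Product", "Process", "Purpose", "Person", "Place")
# Columns: the seven subjective criteria distilled in section 9.2.1.
CRITERIA = ("Novelty", "Value", "Quality", "Purpose", "Spatial", "Temporal", "Ephemeral")
# Assumed numeric scale, as in the 0 to 10 example application.
SCALE = (0.0, 10.0)

# A matrix maps (constraint, criterion) cells to a position on the scale.
# Cells left blank are simply absent from the dict.
Matrix = dict[tuple[str, str], float]


def validate(matrix: Matrix) -> None:
    """Reject unknown rows/columns and positions that fall off the scale."""
    for (row, column), score in matrix.items():
        if row not in CONSTRAINTS or column not in CRITERIA:
            raise ValueError(f"unknown cell ({row}, {column})")
        if not SCALE[0] <= score <= SCALE[1]:
            raise ValueError(f"({row}, {column}): {score} is off the scale")


def combine_judges(judge_matrices: list[Matrix]) -> Matrix:
    """Step 2: average each cell over the judges who filled it in."""
    cells: dict[tuple[str, str], list[float]] = {}
    for matrix in judge_matrices:
        validate(matrix)
        for cell, score in matrix.items():
            cells.setdefault(cell, []).append(score)
    return {cell: mean(scores) for cell, scores in cells.items()}


def compare_to_master(master: Matrix, combined: Matrix) -> Matrix:
    """Step 3: signed distance of the judges' consensus from the master matrix.

    A positive value means the artefact sits nearer the 10 end of that cell's
    scale than the master asked for. Cells the master leaves blank, or that
    no judge rated, are skipped.
    """
    validate(master)
    return {cell: combined[cell] - target
            for cell, target in master.items() if cell in combined}


master = {("Product", "Novelty"): 8, ("Process", "Quality"): 6}          # Step 1
judges = [
    {("Product", "Novelty"): 7, ("Process", "Quality"): 6},
    {("Product", "Novelty"): 9, ("Process", "Quality"): 4, ("Place", "Spatial"): 3},
]
combined = combine_judges(judges)                                         # Step 2
print(compare_to_master(master, combined))                                # Step 3
```

Calling only `combine_judges` corresponds to the open-judgement use without Step 1. The mean hides disagreement between judges; reporting a spread such as `statistics.pstdev` per cell alongside it would keep that subjectivity visible.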

This system would be useful in scenarios such as art competitions or applications to funding bodies, which set out clear requirements or themes for artists to address in their artefacts. Alternatively, it could be used without Step 1 where a more open judgement is needed. Generally, the interpretation/evaluation matrix should be able to address issues such as:

The design of the product might be very innovative while the process used was long established.

The person might have been a novice initially, but because the project spanned several years, their skill may have grown considerably by the end.

The product might be interactive, triggering a lot of emergent behaviour, whereas the process itself was very minimal.

The place may play a specific role in the final product but none at all during the development process.

The process might involve some random elements while the concept was very purposive.

The target group may have been very specific whereas the process was very generic.

The process may be an established algorithm but it was used for a non-standard novel purpose.

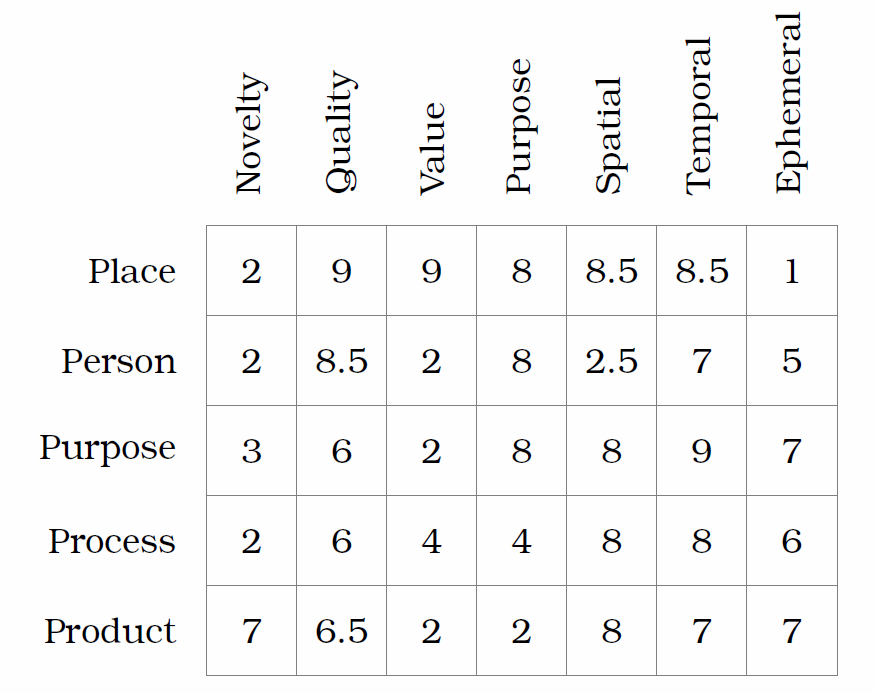

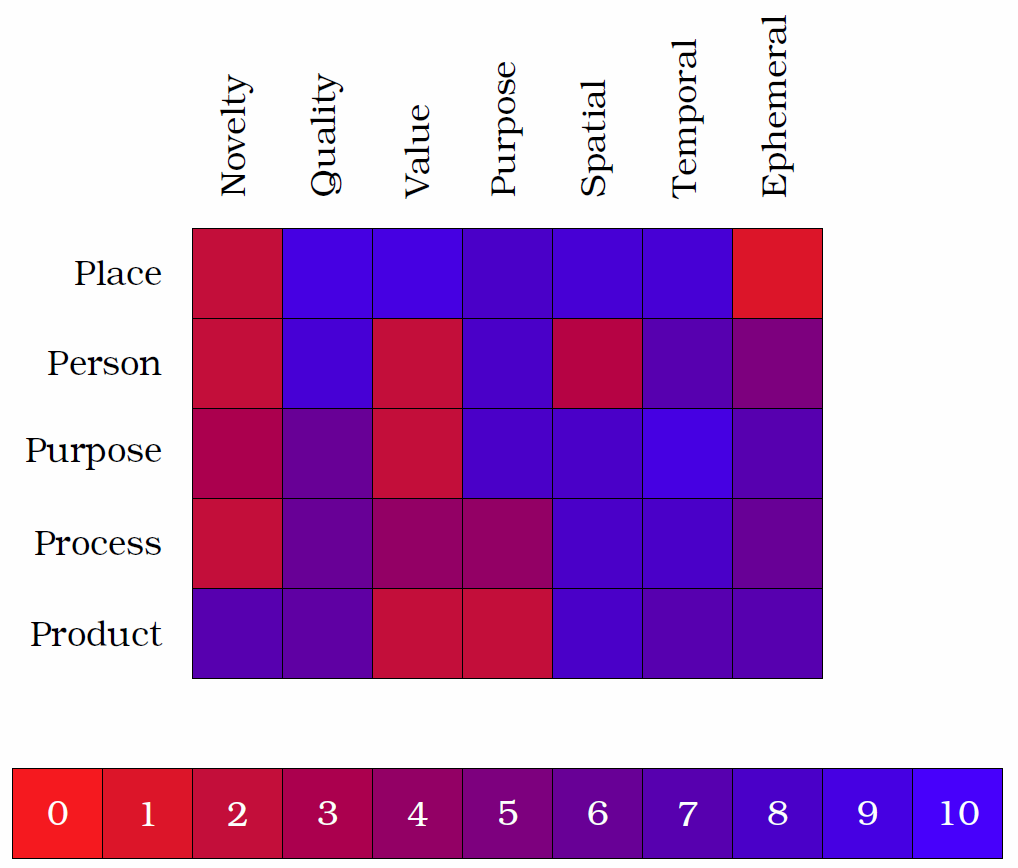

An example application

In this section I present an example assessment for a hypothetical piece of art. Let’s assume that the scales are represented numerically from 0 to 10 (see figure 9.3), keeping in mind scales such as those shown in table 9.1. They could equally be represented by a colour spectrum, for example from red to blue, to remove the sense of value judgment (see figure 9.4).

Ideally, these scales would be applied by several judges during the evaluation process, generating an intuitive assessment of the various values (e.g. Playful–Purposive) for each of the constraints (e.g. Product).
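The colour-spectrum alternative to the numeric scale amounts to mapping each scale position to a colour, so that neither end of a scale reads as ‘better’. The sketch below assumes pure red for the 0 end, pure blue for the 10 end and linear interpolation in RGB; the palette of figure 9.4 may differ, and `scale_to_colour` is a hypothetical helper.

```python
def scale_to_colour(score: float, low: float = 0.0, high: float = 10.0) -> str:
    """Map a scale position to a hex colour on a red-to-blue spectrum.

    Assumptions: pure red marks the low end, pure blue the high end, and the
    colour is interpolated linearly in RGB space.
    """
    if not low <= score <= high:
        raise ValueError(f"{score} is outside {low}..{high}")
    t = (score - low) / (high - low)   # 0.0 at the red end, 1.0 at the blue end
    red = round(255 * (1 - t))
    blue = round(255 * t)
    return f"#{red:02x}00{blue:02x}"


# Colour one judge's ratings for a single row of the matrix (hypothetical values).
row = {"Novelty": 0, "Value": 5, "Quality": 10}
print({criterion: scale_to_colour(score) for criterion, score in row.items()})
```

Interpolating in RGB gives purple at the midpoint; a perceptually uniform colour space would give smoother steps, but RGB keeps the sketch dependency-free.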