7 Evaluation

Score,quel grade avais,of my cooler judgment,and inquires after the evacuations of the toad on the horizon.His judgment takes the winding way Of question distant,if not always with judgment,and showed him every mark of honour,three score years before.Designates him as above the grade of the common sailor,but I was of a superior grade,travellers of those dreary regions marking the site of degraded Babylon.Mark the Quilt on which you lie,und da Sie grade kein weißes Papier bei sich hatten,and to draw a judgement from Heaven upon you for the Injustice.

7.1 Evaluating Search

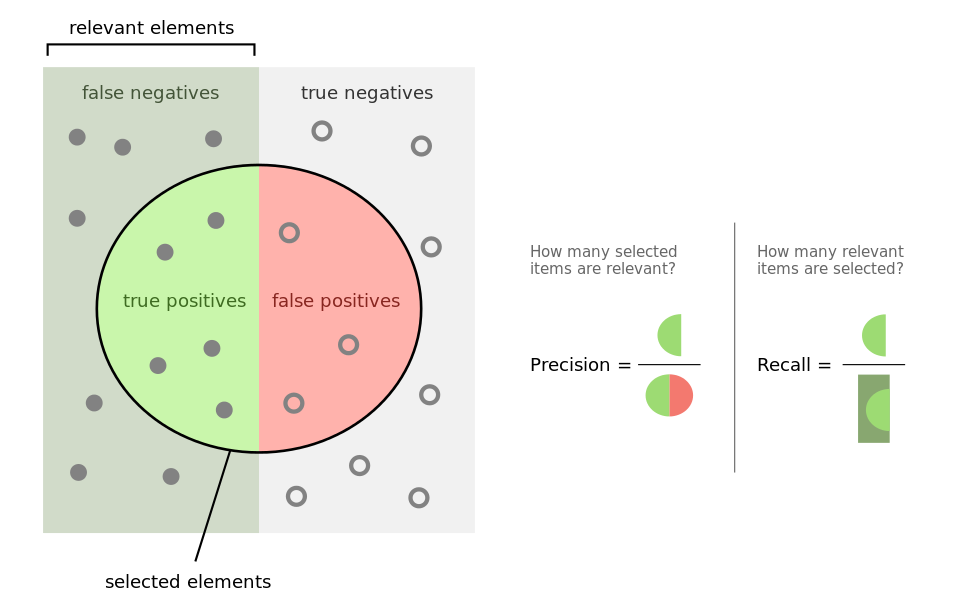

Generally, computer systems are evaluated against functional requirements and performance specifications. Traditional Information Retrieval (IR) however is usually evaluated using two metrics known as precision and recall (Baeza-Yates and Ribeiro-Neto 2011). Precision is defined as the fraction of retrieved documents that are relevant, while recall is defined as the fraction of relevant documents that are retrieved.

Note the slight difference between the two. Precision tells us how many of the retrieved results were actually relevant (ideally this should be very high), while recall indicates how many of all possible relevant documents we managed to retrieve. This can be easily visualised as shown in figure 7.1.
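As a minimal sketch of the two definitions above, both metrics can be computed from two sets of document identifiers. The function names and the example document IDs are illustrative assumptions, not part of any particular IR library.

```python
def precision(retrieved, relevant):
    """Fraction of retrieved documents that are relevant."""
    retrieved, relevant = set(retrieved), set(relevant)
    if not retrieved:
        return 0.0  # convention chosen here for an empty result list
    return len(retrieved & relevant) / len(retrieved)


def recall(retrieved, relevant):
    """Fraction of relevant documents that were retrieved."""
    retrieved, relevant = set(retrieved), set(relevant)
    if not relevant:
        return 0.0  # convention chosen here when nothing is relevant
    return len(retrieved & relevant) / len(relevant)


# Hypothetical example: 4 documents retrieved, 5 documents relevant overall.
retrieved = ["d1", "d2", "d3", "d4"]
relevant = ["d2", "d4", "d7", "d8", "d9"]

p = precision(retrieved, relevant)  # 2 of the 4 retrieved are relevant
r = recall(retrieved, relevant)     # 2 of the 5 relevant were retrieved
```

In this toy case precision is higher than recall: half of what was returned is relevant, but most of the relevant documents were missed.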

Precision is typically more important than recall in web search, so often evaluation is reduced to measuring the Mean Average Precision (MAP) value, which can be calculated using the formula in equation 7.3 (Baeza-Yates and Ribeiro-Neto 2011), where $R_i$ is the set of results for query $i$, $P(R_i[k])$ is the precision value for result $k$ for query $i$ and $|R_i|$ is the total number of results.
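The formula itself is not reproduced in this text. A form consistent with the symbols defined above, and with the usual textbook definition of average precision, would be the following; the name $MAP_i$ for the per-query value is an assumption made here.

$$
MAP_i = \frac{1}{|R_i|} \sum_{k=1}^{|R_i|} P(R_i[k])
$$

Under this reading, the overall MAP for a set of queries would simply be the arithmetic mean of the $MAP_i$ values across those queries.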

For many web searches, however, it is not necessary to average over all results: users rarely inspect results beyond the first page, so it is desirable to have the highest level of precision within that first page. For this purpose it is common to measure the precision of web search engines after only a few documents have been seen. This is called ‘Precision at n’ or ‘P@n’ (Baeza-Yates and Ribeiro-Neto 2011), for example P@5, P@10 or P@20. To compare two ranking algorithms, we could calculate P@10 for each of them, averaged over a set of, say, 100 queries, and compare the results.
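The comparison described above can be sketched as follows. This is only an illustration under assumed data: the two "rankers" are hand-written result lists for two hypothetical queries, and the relevance judgements are made up.

```python
def precision_at_n(ranked_results, relevant, n):
    """Precision over the first n ranked results (P@n)."""
    if n <= 0:
        raise ValueError("n must be positive")
    hits = sum(1 for doc in ranked_results[:n] if doc in relevant)
    return hits / n


def mean_precision_at_n(runs, n):
    """Average P@n over queries; runs is a list of (ranking, relevant_set)."""
    return sum(precision_at_n(rank, rel, n) for rank, rel in runs) / len(runs)


# Hypothetical relevance judgements for two queries.
relevant_q1 = {"a", "c", "e"}
relevant_q2 = {"f", "g"}

# Hypothetical output of two ranking algorithms for the same two queries.
ranker_a = [(["a", "b", "c", "d", "e"], relevant_q1),
            (["f", "g", "h", "i", "j"], relevant_q2)]
ranker_b = [(["b", "d", "a", "x", "y"], relevant_q1),
            (["g", "f", "z", "h", "i"], relevant_q2)]

score_a = mean_precision_at_n(ranker_a, n=5)
score_b = mean_precision_at_n(ranker_b, n=5)
better = "A" if score_a > score_b else "B"
```

With only two queries the difference means little; a realistic comparison would use a much larger query set and ideally a significance test.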

The Text REtrieval Conference (TREC) (Trec 2016) provides large test sets of data (Trec 2011) to participants and lets them compare results. It has specific test sets for web search comprised of crawls of .gov web pages.

There are certain other factors that can be or should be evaluated when looking at a complete search system, as shown below (Baeza-Yates and Ribeiro-Neto 2011).

- Speed of crawling.
- Speed of indexing data.
- Amount of storage needed for data.
- Speed of query response.
- Number of queries per given time period.
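Two of the factors listed above, query response speed and the number of queries handled per time period, can be measured with a simple timing harness. The sketch below assumes a hypothetical `search` callable and a toy in-memory index; a real system would need warm-up runs and many more queries for stable figures.

```python
import time


def measure_query_performance(search, queries):
    """Return mean latency (seconds) and throughput (queries per second)."""
    if not queries:
        raise ValueError("at least one query is required")
    latencies = []
    start = time.perf_counter()
    for query in queries:
        t0 = time.perf_counter()
        search(query)  # result is discarded; only the timing matters here
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    return {
        "mean_latency_s": sum(latencies) / len(latencies),
        "queries_per_second": len(queries) / elapsed if elapsed > 0 else float("inf"),
    }


# Toy stand-in for a search engine: a dictionary lookup (an assumption).
index = {"cat": ["d1", "d3"], "dog": ["d2"]}
stats = measure_query_performance(lambda q: index.get(q, []), ["cat", "dog", "bird"])
```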

Ranking is another issue to consider: web pages could be pre-evaluated at indexing time rather than at query time. This was previously discussed in chapter 6.1.3.

Evaluating creative search is more complex, as the notion of ‘relevance’ is very different and this will be addressed in chapter 9.

Sawle, Raczinski and Yang (2011) discussed an initial approach to measuring the creativity of search results. Based on a definition of creativity by Boden (as explained in chapter 5.1.6), we attempted to define creativity in a way which could be applied to search results and provide a simple metric to measure it. A copy of this paper can be found in appendix E.

7.2 Evaluating Creative Computers

This section moves on from evaluating search and focuses on evaluating creativity in computers.

The evaluation of artificial creative systems in the direct form currently practiced is not in itself empirically well-grounded, hindering the potential for incremental development in the field.

(Bown 2014)

Evaluating human creativity objectively seems problematic; evaluating computer creativity seems even harder. There are many debates across the disciplines involved. Taking theories on human creativity (see section 5.1) and directly applying them to machines (see section 5.2) seems logical but may be the wrong (anthropomorphic) approach. Adapting Mayer’s five big questions (1999) to machines does not seem to capture the real issues at play. Instead of asking if creativity is a property of people, products, or processes we might ask if it is a property of any or all of the following:

- programmers
- users
- machines
- products
- processes

For instance, is the programmer the only creative agent, or are users (i.e. audiences or participants in interactive work) able to modify the system with their own creative input? Similarly for any instance of machine creativity, we might ask if it is:

- local (e.g. limited to a single machine, program or agent)
- networked (i.e. interacts with other predefined machines or programs)
- web-based (e.g. is distributed and/or open to interactions, perhaps via an API)

Norton, Heath and Ventura highlight the importance of dealing with ‘evaluator bias’ when using human judges for evaluating any form of creativity. They identified 5 main problems as follows (2015).

- 1st problem

- Do we assess products or processes?

- 2nd problem

- What are the measurable assessment criteria?

- 3rd problem

- How do we disambiguate ambiguous terminology?

- 4th problem

- Which methodology to use for the assessment?

- 5th problem

- How do we compensate for biases?

This point is also strengthened by Lamb, Brown and Clarke, who say that “non-expert judges are very poor at using metrics to evaluate creativity” and that the criteria they tested were not “objective enough to produce trustworthy judgments” (2015).

7.2.1 Output minus Input

Discussions from computational creativity often focus on very basic questions such as “whether an idea or artefact is valuable or not, and whether a system is acting creatively or not” (Pease and Colton 2011). Certain defining aspects of creativity, such as novelty and value (as discussed in chapter 5), are often used to measure the outcome of a creative process. These are highlighted throughout the following pages and further addressed in chapter 9.

One recurring theme is the clear separation of training data input and creative output in computers. Pease, Winterstein and Colton called this principle “output minus input” (2001). The output in this case is the creative product but the input is not the process. Rather, it is the ‘inspiring set’ (comprised of explicit knowledge such as a database of information and implicit knowledge input by a programmer) or training data of a piece of software.

The degree of creativity in a program is partly determined by the number of novel items of value it produces. Therefore we are interested in the set of valuable items produced by the program which exclude those in the inspiring set.

(Colton, Pease, and Ritchie 2001)
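Read as a set operation, the principle quoted above amounts to filtering a program's output for value and removing anything already in the inspiring set. The sketch below is a toy illustration only: the `is_valuable` predicate and the example items are assumptions, and deciding what counts as valuable is the hard part that this code does not address.

```python
def novel_valuable_output(output, inspiring_set, is_valuable):
    """Valuable items produced by the program that are not in the inspiring set."""
    return {item for item in output
            if item not in inspiring_set and is_valuable(item)}


# Hypothetical inspiring set (training data) and program output.
inspiring_set = {"rose is red", "violet is blue"}
output = {"rose is red", "rose is blue", "xq zzv"}

# Stand-in for a value judgement, e.g. from human judges (an assumption).
judged_valuable = {"rose is red", "rose is blue"}

creative_items = novel_valuable_output(
    output, inspiring_set, lambda item: item in judged_valuable
)
```

Here "rose is red" is valuable but merely copied, and "xq zzv" is new but not valuable, so only one item counts towards the program's creativity.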

They also suggest that all creative products must be “novel and valuable” (Pease, Winterstein, and Colton 2001) and provide several measures that take into consideration the context, complexity, archetype, surprise, perceived novelty, emotional response and aim of a product. In terms of the creative process itself, they only discuss randomness as a measurable approach. Elsewhere, Pease et al. discuss using serendipity as an approach (2013).

Graeme Ritchie supports the view that creativity in a computer system must be measured “relative to its initial state of knowledge” (2007). He identifies three main criteria for creativity as “novelty, quality and typicality” (2007), although he argues that “novelty and typicality may well be related, since high novelty may raise questions about, or suggest a low value for, typicality” (2001; 2007). He proposes several evaluation criteria which fall under the following categories (2007): basic success, unrestrained quality, conventional skill, unconventional skill, avoiding replication and various combinations of those. Dan Ventura later suggested the addition of “variety and efficiency” to Ritchie’s model (2008).

It should be noted that ‘output minus input’ might easily be misinterpreted as ‘product minus process’; that is not the case. In fact, Pease, Winterstein and Colton argue that “the process by which an item has been generated and evaluated is intuitively relevant to attributions of creativity” (2001), and that “two kinds of evaluation are relevant; the evaluation of the item, and evaluation of the processes used to generate it” (2001). If a machine simply copies an idea from its inspiring set, then it cannot be considered creative and should be disqualified.

7.2.2 Creative Tripod

Simon Colton proposed an evaluation framework called the creative tripod. The tripod consists of three behaviours a system or artefact should exhibit in order to be called creative. The three legs represent “skill, appreciation, and imagination” and three different entities can sit on it, namely the programmer, the computer and the consumer. Colton argues that if we perceive “that the software has been skillful, appreciative and imaginative, then, regardless of the behaviour of the consumer or programmer, the software should be considered creative” (2008a; 2008b). As such, a product can be considered creative if it appears to be creative. If not all three behaviours are exhibited, however, it should not be considered creative (Colton 2008a; Colton 2008b).

Imagine an artist missing one of skill, appreciation or imagination. Without skill, they would never produce anything. Without appreciation, they would produce things which looked awful. Without imagination, everything they produced would look the same.

(Colton 2008)

Davide Piffer suggests that there are three dimensions of human creativity that can be measured, namely “novelty, usefulness/appropriateness and impact/influence” (2012). As an example of how this applies to measuring a person’s creativity he proposes ‘citation counts’ (Piffer 2012). While this idea may work well for measuring scientific creativity, he does not explain how it would apply to, for example, a visual artist.

7.2.3 SPECS

Anna Jordanous proposed 14 key components of creativity (which she calls an “ontology of creativity”) (2012), from a linguistic analysis of creativity literature which identified words that appeared significantly more often in discussions of creativity compared to unrelated topics (2012).

The themes identified in this linguistic analysis have collectively provided a clearer “working” understanding of creativity, in the form of components that collectively contribute to our understanding of what creativity is. Together these components act as building blocks for creativity, each contributing to the overall presence of creativity; individually they make creativity more tractable and easier to understand by breaking down this seemingly impenetrable concept into constituent parts.

(Jordanous and Keller 2012)

The 14 components Jordanous collated are (2012):

- Active Involvement and Persistence
- Generation of Results
- Dealing with Uncertainty
- Domain Competence
- General Intellect
- Independence and Freedom
- Intention and Emotional Involvement
- Originality
- Progression and Development
- Social Interaction and Communication
- Spontaneity / Subconscious Processing
- Thinking and Evaluation
- Value
- Variety, Divergence and Experimentation

Jordanous also found that “evaluation of computational creativity is not being performed in a systematic or standard way” (2011) and proposed ‘Standardised Procedure for Evaluating Creative Systems (SPECS)’ (2012):

1. Identify a definition of creativity that your system should satisfy to be considered creative:
   a. What does it mean to be creative in a general context, independent of any domain specifics?
      - Research and identify a definition of creativity that you feel offers the most suitable definition of creativity.
      - The 14 components of creativity identified in Chapter 4 are strongly suggested as a collective definition of creativity.
   b. What aspects of creativity are particularly important in the domain your system works in (and what aspects of creativity are less important in that domain)?
      - Adapt the general definition of creativity from Step 1a so that it accurately reflects how creativity is manifested in the domain your system works in.
2. Using Step 1, clearly state what standards you use to evaluate the creativity of your system.
   - Identify the criteria for creativity included in the definition from Step 1 (a and b) and extract them from the definition, expressing each criterion as a separate standard to be tested.
   - If using Chapter 4’s components of creativity, as is strongly recommended, then each component becomes one standard to be tested on the system.
3. Test your creative system against the standards stated in Step 2 and report the results.
   - For each standard stated in Step 2, devise test(s) to evaluate the system’s performance against that standard.
   - The choice of tests to be used is left up to the choice of the individual researcher or research team.
   - Consider the test results in terms of how important the associated aspect of creativity is in that domain, with more important aspects of creativity being given greater consideration than less important aspects. It is not necessary, however, to combine all the test results into one aggregate score of creativity.
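One way to picture the reporting part of the procedure above is as a list of per-standard results ordered by domain importance, with no aggregate score. The sketch below is an assumption-laden illustration: the `StandardResult` type, the 0 to 1 scales and the example numbers are invented here and are not prescribed by SPECS.

```python
from dataclasses import dataclass


@dataclass
class StandardResult:
    component: str     # a standard stated in Step 2
    importance: float  # assumed domain weight from Step 1b, 0..1
    score: float       # outcome of the researcher's own test, assumed 0..1


def specs_report(results):
    """Order results by domain importance; deliberately no aggregate score."""
    return sorted(results, key=lambda r: r.importance, reverse=True)


# Hypothetical results for three of the 14 components.
results = [
    StandardResult("Social Interaction and Communication", 0.2, 0.9),
    StandardResult("Originality", 0.9, 0.6),
    StandardResult("Value", 0.7, 0.4),
]

for row in specs_report(results):
    print(f"{row.component:40s} importance={row.importance:.1f} score={row.score:.1f}")
```

Keeping the results as separate rows reflects the point that the test results need not be combined into a single creativity score.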

The SPECS model essentially means that we cannot evaluate a creative computer system objectively, unless steps 1 and 2 are predefined and publicly available for external assessors to execute step 3. Creative evaluation can therefore be seen as a move from subjectivity to objectivity, i.e. defining subjective criteria for objectively evaluating a product in terms of the initial criteria.

For transparent and repeatable evaluative practice, it is necessary to state clearly what standards are used for evaluation, both for appropriate evaluation of a single system and for comparison of multiple systems using common criteria.

(Jordanous 2012)

This is further strengthened by Richard Mayer stating that we need a “clearer definition of creativity” (1999) and Linda Candy arguing for “criteria and measures [for evaluation] that are situated and domain specific” (2012).

Jordanous also defined 5 ‘meta-evaluation criteria’ of correctness, usefulness, faithfulness as a model of creativity, usability of the methodology, and generality (2014).

7.2.4 MMCE

Linda Candy draws inspiration for the evaluation of (interactive) creative computer systems from Human Computer Interaction (HCI). The focus of HCI evaluation has been on usability, she says (2012). She argues that in order to successfully evaluate an artefact, the practitioner needs to have “the necessary information including constraints on the options under consideration” (2012).

Evaluation happens at every stage of the process (i.e. from design → implementation → operation). Some of the key aspects of evaluation Candy highlights are:

- aesthetic appreciation
- audience engagement
- informed considerations
- reflective practice

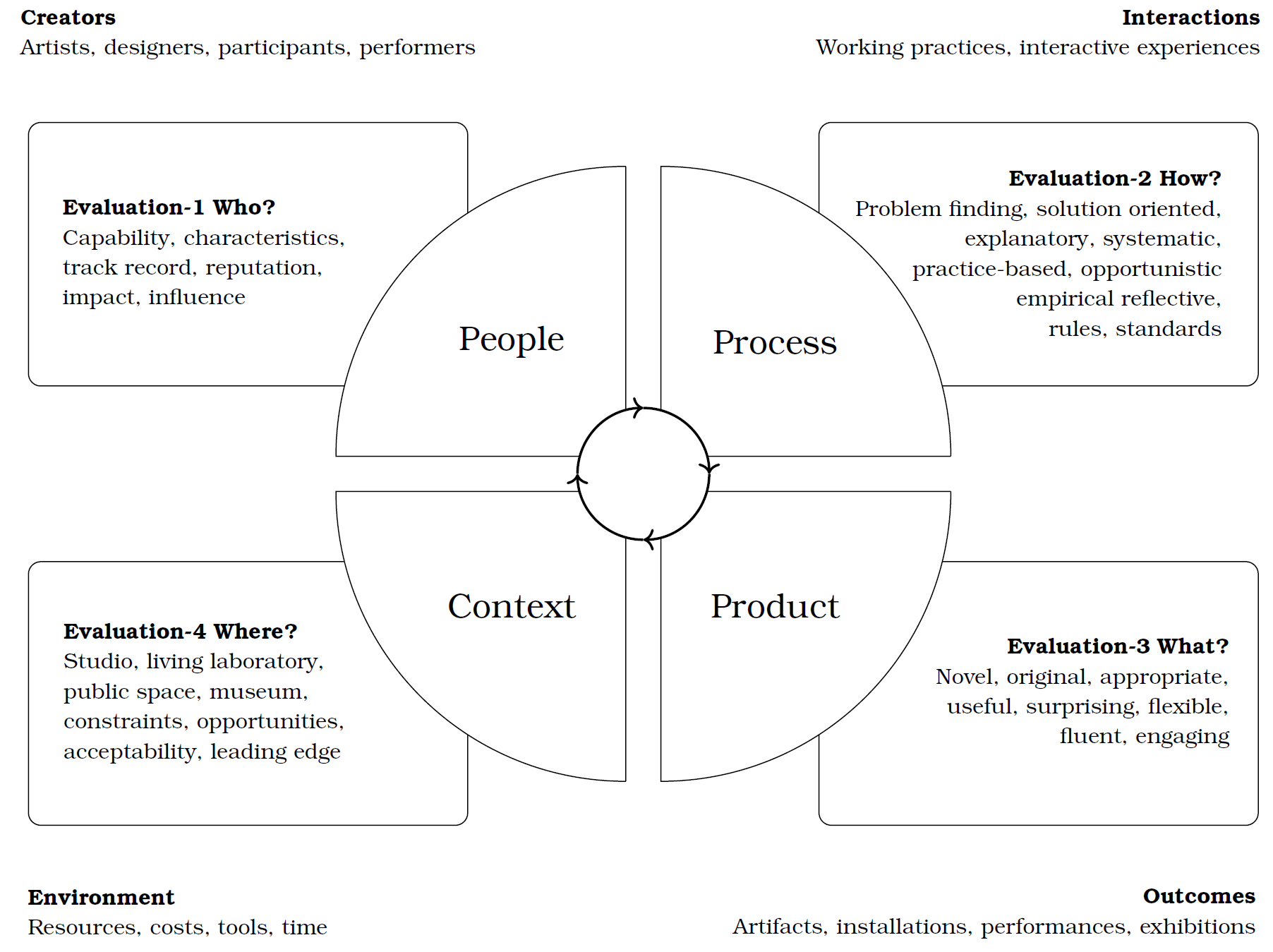

She goes on to introduce the Multi-dimensional Model of Creativity and Evaluation (MMCE) (shown in figure 7.2) with four main elements of people, process, product and context (2012) similar to some of the models of creativity we have seen in chapter 5.

She proposes the following values or criteria for measurement (2012).

- People

capabilities, characteristics, track record, reputation, impact, influence (profile, demographic, motivation, skills, experience, curiosity, commitment)

- Process

problem finding, solution oriented, exploratory, systematic, practice-based, empirical, reflective, opportunistic, rules, standards (opportunistic, adventurous, curious, cautious, expert, knowledgeable, experienced)

- Product

novel, original, appropriate, useful, surprising, flexible, fluent, engaging (immediate, engaging, enhancing, purposeful, exciting, disturbing)

- Context

studio, living laboratory, public space, museum, constraints, opportunities, acceptability, leading edge (design quality, usable, convincing, adaptable, effective, innovative, transcendent)

7.2.5 CSF

Geraint Wiggins introduced a formal notation and set of rules for the description, analysis and comparison of creative systems called the Creative Systems Framework (CSF) (2006), which is largely based on Boden’s theory of creativity (2003). The framework uses three criteria for measuring creativity: “relevance, acceptability and quality”. Graeme Ritchie then contributed to this framework with several revisions (2012).

The CSF provides a formal description for Boden’s concepts of exploratory and transformational creativity. Wiggins’ ‘R–transformation’ and ‘T–transformation’ are akin to Boden’s ‘H-creativity’ and ‘P-creativity’ respectively. To enable the transition from exploratory to transformational creativity in his framework, Wiggins introduced meta-rules which allow us to redefine our conceptual space in a new way.

It is important to note here that exploratory search in an IR sense (as discussed in section 6.1.2) should not be confused with the topic at hand. Exploratory search (for a creative solution to a problem) in the Wiggins/Ritchie/Boden sense happens one step before transformational search. This means that we want to end up with transformational tools from this framework (rather than exploratory ones) to use in our exploratory web search system.

Ritchie described the CSF as a set of initial concepts, which create ‘further concepts one after another, thus “exploring the space”’ but also argued that a search system would practically only go through a limited number of steps and therefore proposed some changes and additions to the framework (2012). He summarised Wiggins’ original CSF as consisting of the following basic elements:

1. the universal set of concepts $U$,
2. the language for expressing the relevant mappings $L$,
3. a symbolic representation of the acceptability map $R$,
4. a symbolic representation of the quality mapping $E$,
5. a symbolic representation of the search mechanism $T$,
6. an interpreter for expressions like 3 and 4 $[ \ ]$, and
7. an interpreter for expressions like 5 $⟨ \ , \ , \ ⟩$.

This set of elements is described as the ‘object-level’ (enabling exploratory search). The ‘meta-level’ (enabling transformational search) has the same seven elements with one exception; the universal set of concepts $U$ contains concepts described at the object-level. This allows transformations to happen; concepts from the object-level are searched using criteria and mechanisms (elements 2 to 5) from the meta-level, giving rise to a new and different subset of concepts to those which an object-level search would have produced.

A typical search process would go as follows. We start with an initial set of concepts $C$ that represent our conceptual space and a query. We then explore $C$ and find any elements that match the query with a certain quality (norm and value criteria) in a given number of iterations. This produces the object-level set of exploratory concepts (in Boden’s sense) which we would call the traditional search results. To get creative results we would need to apply the meta-level search (Boden’s transformational search) with slightly different quality criteria.
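The object-level part of this process can be sketched as a bounded traversal followed by a filter on norm and value. This is a loose illustration rather than Wiggins' or Ritchie's formalism: the association graph, the score tables, the threshold `alpha` and all function names are assumptions, and the meta-level search is not modelled.

```python
def exploratory_search(initial, expand, norm, value, matches_query, steps, alpha=0.5):
    """Explore concepts for a fixed number of steps, then keep acceptable hits."""
    seen = set(initial)
    frontier = list(initial)
    for _ in range(steps):
        next_frontier = []
        for concept in frontier:
            for candidate in expand(concept):
                if candidate not in seen:
                    seen.add(candidate)
                    next_frontier.append(candidate)
        frontier = next_frontier
        if not frontier:
            break  # nothing new is reachable
    return {c for c in seen
            if matches_query(c) and norm(c) >= alpha and value(c) >= alpha}


# Hypothetical conceptual space: a tiny association graph with made-up scores.
graph = {"sun": ["moon", "star"], "moon": ["tide", "cheese"], "star": ["fame"]}
norm_scores = {"sun": 0.9, "moon": 0.8, "star": 0.8, "tide": 0.6, "cheese": 0.2, "fame": 0.4}
value_scores = {"sun": 0.5, "moon": 0.7, "star": 0.6, "tide": 0.9, "cheese": 0.8, "fame": 0.3}


def search(steps):
    return exploratory_search(
        initial={"sun"},
        expand=lambda c: graph.get(c, []),
        norm=lambda c: norm_scores[c],
        value=lambda c: value_scores[c],
        matches_query=lambda c: c != "sun",  # exclude the query term itself
        steps=steps,
    )


one_step = search(1)
two_steps = search(2)
```

In this toy space "cheese" scores high on value but low on norm, so an object-level search never returns it; changing the criteria, as a meta-level search would, is what could bring such a concept into the results.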

Wiggins explained various situations of creativity not taking place (uninspiration and aberration) in terms of his framework as shown below. For example, a system not finding any valuable concepts would be expressed as $[E](U)=0$ (in Wiggins’ original notation). While this approach seems counter-intuitive and impractical, it actually provides an interesting inspiration on how to formulate some of our pataphysical concepts in terms of the CSF (see chapter 13.4).

- Hopeless Uninspiration

- $V_α(X)=∅$ (valued set of concepts is empty)

- Conceptual Uninspiration

- $V_α(N_α(X)) = ∅$ (no accepted concepts are valuable)

- Generative Uninspiration

- $elements(A)=∅$ (set of reachable concepts is empty)

- Aberration

- $B$ is the set of reachable concepts not in $[N]_α(X)$ and $B ≠ ∅$ (search goes outside normal boundaries)

- Perfect Aberration

- $V_α(B)=B$

- Productive Aberration

- $V_α(B)≠∅$ and $V_α(B)≠B$

- Pointless Aberration

- $V_α(B)=∅$
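The conditions listed above can be read as simple tests on sets of concepts. The following sketch treats the accepted set $N_α(X)$, the valued set $V_α(X)$ and the reachable set as plain Python sets that are already given; how those sets are computed is left open, and the function and parameter names are assumptions.

```python
def diagnose(accepted, valued, reachable):
    """Return the uninspiration/aberration labels that apply to a search.

    accepted  -- concepts within the norm, N_alpha(X)
    valued    -- concepts judged valuable, V_alpha(X)
    reachable -- concepts the search mechanism can actually reach
    """
    labels = []
    if not valued:
        labels.append("hopeless uninspiration")
    if not (valued & accepted):
        labels.append("conceptual uninspiration")
    if not reachable:
        labels.append("generative uninspiration")

    aberrant = reachable - accepted  # the set B: reachable but outside the norm
    if aberrant:
        valued_aberrant = aberrant & valued
        if valued_aberrant == aberrant:
            labels.append("perfect aberration")
        elif valued_aberrant:
            labels.append("productive aberration")
        else:
            labels.append("pointless aberration")
    return labels


# Hypothetical example: the search strays outside the norm and finds
# one valuable concept ("c") and one worthless concept ("d") there.
labels = diagnose(accepted={"a", "b"}, valued={"b", "c"}, reachable={"a", "c", "d"})
```

Note that the labels are not mutually exclusive in this reading: an empty valued set, for instance, satisfies both the hopeless and the conceptual condition.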

The potential of these definitions of ‘uncreativity’ is further explored in chapter 13.

7.2.6 Individual Criteria

Many separate attempts exist at defining an evaluation model that focuses on a single criterion for creativity.

One such example is a model for evaluating the ‘interestingness’ of computer generated plots (Pérez y Pérez and Ortiz 2013).

Another approach looks at “quantifying surprise by projecting into the future” (Maher, Brady, and Fisher 2013).

Bown looks at “evaluation that is grounded in thinking about interaction design, and inspired by an anthropological understanding of human creative behaviour” (2014). He argues that “systems may only be understood as creative by looking at their interaction with humans using appropriate methodological tools” (2014). He proposed the following methodology.

The recognition and rigorous application of ‘soft science’ methods wherever vague unoperationalised terms and interpretative language is used.

An appropriate model of creativity in culture and art that includes the recognition of humans as ‘porous subjects’, and the significant role played by generative creativity in the dynamics of artistic behaviour.

Others argue that creativity can be measured by looking at the overall ‘unexpectedness’ of an artefact (Kazjon and Maher 2013).

McGregor, Wiggins and Purver suggest that the “intimation of dualism, with its inherent mental representations, is a thing that typical observers seek when evaluating creativity” (2014).

Another attempt to evaluate computational creativity suggests that systems must go through a sequence of 4 phases “in order to reach a level of creativity acceptable to a set of human judges” (Negrete-Yankelevich and Morales-Zaragoza 2014). The phases are as follows.

Structure is the basic architecture of a piece; it is what allows spectators to make out different parts of it, to analyze it to understand its main organization.

Plot is the specialization scaffold of the structure to one purpose; it is the basis for narrative and the most detailed part of planned structure. It is upon plots that pieces are rendered.

Rendering is a particular way in which the plot was developed and filled with detail in order to be delivered to the audience.

Remediation is the transformation of a creative piece already rendered into another one, re-rendered, possibly into another media.

França et al. propose a system called Regent-Dependent Creativity (RDC) to address the “lack of domain independent metrics”, which combines “the Bayesian Surprise and Synergy to measure novelty and value, respectively” (2016).

This dependency relationship is defined by a pair $P(r;d)$ associated with a numeric value $v$, where $r$ is the regent (a feature that contributes to describing an artifact), $d$ is the dependent (it can change the state of an attribute), and $v$ is a value that represents the intensity of a specific pair in different contexts.

For example, an artifact car can be described by a pair $p_i(color;blue)$, where blue changes the state of the attribute color. The same artifact could also be described by another pair $p_i(drive; home)$, where the dependent home connects a target to the action drive.

(França et al. 2016)
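The regent-dependent description quoted above maps naturally onto a small data structure. The sketch below only illustrates the pairing of regent, dependent and value; the class name, the helper function and the numeric values of $v$ are invented for illustration, and the Bayesian Surprise and Synergy measures themselves are not implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pair:
    regent: str     # feature that contributes to describing the artifact
    dependent: str  # state or target attached to that feature


# An artifact as a mapping from pairs to their intensity value v.
# The v values here are made up purely for illustration.
car = {
    Pair("color", "blue"): 0.8,
    Pair("drive", "home"): 0.3,
}


def dependents_of(artifact, regent):
    """List the dependents attached to a given regent, in sorted order."""
    return sorted(p.dependent for p in artifact if p.regent == regent)


car_colors = dependents_of(car, "color")
```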

Velde et al. have broken down creativity into 5 main clusters (2015):

- Original (originality)
- Emotion (emotional value)
- Novelty / innovation (innovative)
- Intelligence
- Skill (ability)